Homemade Ambilight

By Sam Scherf

Written December 12, 2021

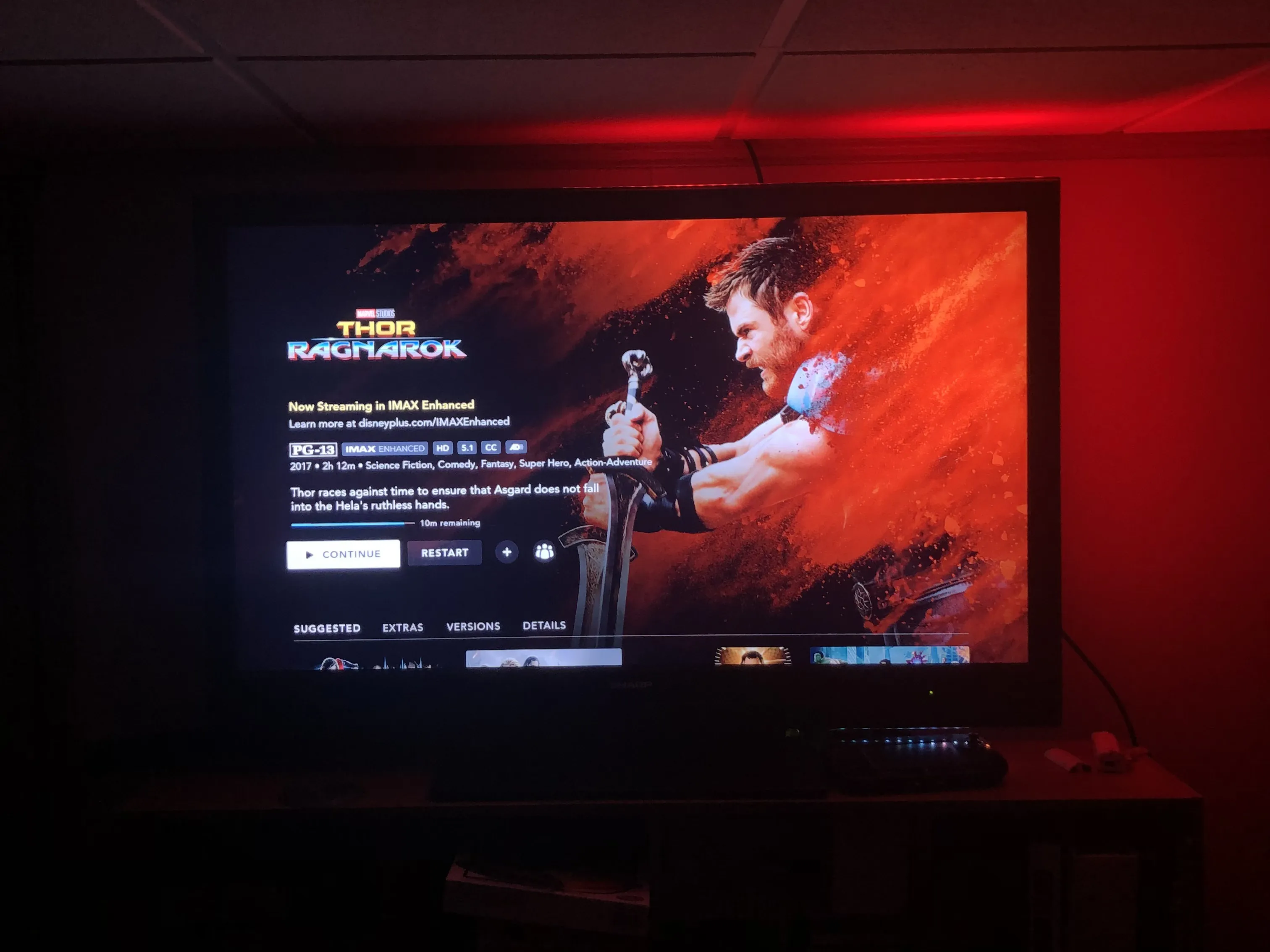

Once upon a time in early 2020, while scrolling on Reddit, I came across a post showing off an Ambient Lighting TV setup. I immediately fell in love with the idea and set out to install one on my TV. Ambient Lighting is a dim light source on the back of a screen or monitor that illuminates the wall or surface behind and just around the display. While the lights can simply show random colors, the most interesting setups match them to the colors at the edge of the screen, as seen in the picture above.

The Theory

To do this, the LED controller must have access to the video on the screen and then process it accordingly. Thankfully, there are a lot of open source projects that do this. These programs fall into two categories. The first uses a small camera mounted on top of your TV and pointed down at the screen. This can be bought as an all-in-one, no-setup-required package, but I wanted something cleaner, so I opted for option two: use a video cable splitter and feed the video into a real-time capture card. The biggest challenge with this method is dealing with HDCP copy protection, which prevents capture cards from capturing most signals (like TV or Netflix).

My Implementation

The first thing I did was use double-sided tape to secure some extra LEDs I had from an old project around the perimeter of my TV. These LEDs were then hooked up to a 100W power supply (yes, overkill, but I had an extra one lying around) and an Arduino which was, in turn, plugged into a Raspberry Pi. All of this was mounted behind the TV, which stands on a shelf next to a wall.

To capture the video signal, I placed a 3x1 HDMI switch (three inputs, one output) next to the TV for all my family's devices. Its output was fed into a 1x2 HDMI splitter, with one output going to the TV and the other going to an HDMI to component cable adapter. These component cables were finally fed into a component cable capture card plugged into the Raspberry Pi (component cables don't support HDCP, so any signal can be captured). The resulting video was very low quality (as one would expect), but that didn't matter since all I needed was the general color of the edge pixels. Extracting those colors was trivial using Hyperion.NG, which also allowed me to control everything via a local webserver hosted on the Raspberry Pi.
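To give a feel for why a low quality capture is good enough, here is a minimal sketch of the "general color of the edge pixels" idea in plain Python. This is not how Hyperion works internally; it is only an illustration. The function names (`average_color`, `top_edge_colors`), the frame layout (a list of rows of RGB tuples), and the `depth` parameter are all my own assumptions for the example.

```python
def average_color(pixels):
    """Return the mean (r, g, b) of a non-empty list of RGB tuples, as integers."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) // n for c in range(3))


def top_edge_colors(frame, num_leds, depth):
    """Average the top `depth` rows of `frame` into `num_leds` colors, left to right.

    `frame` is a list of rows; each row is a list of (r, g, b) tuples.
    This layout is an assumption for the sketch, not a capture card format.
    """
    width = len(frame[0])
    depth = min(depth, len(frame))
    colors = []
    for i in range(num_leds):
        # Column range covered by LED i; integer math keeps segments contiguous.
        x0 = i * width // num_leds
        x1 = max(x0 + 1, (i + 1) * width // num_leds)
        pixels = [frame[y][x] for y in range(depth) for x in range(x0, x1)]
        colors.append(average_color(pixels))
    return colors


if __name__ == "__main__":
    # Tiny 4x2 test frame: left half red, right half blue.
    red, blue = (255, 0, 0), (0, 0, 255)
    frame = [[red, red, blue, blue] for _ in range(2)]
    print(top_edge_colors(frame, num_leds=2, depth=1))
```

Because each LED gets the average of a whole block of pixels, noise and blur in the captured video mostly cancel out. The same averaging would be repeated for the bottom, left, and right edges to cover the full perimeter.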